“The Kicker” for Developers: AI Assistant for Project Management

A typical day of a project manager at a development company involves continuous immersion in work chats, constantly chasing deadlines, and extensive communications. The management of ITEXUS, a full-cycle IT production company, decided to automate many of these tasks for their project managers by introducing a virtual assistant.

About the Client

The company specializes in complex custom development in e-commerce, insurance, and fintech, implements digital projects, and creates web services and mobile applications. The production team includes outsourcing and outstaffing specialists involved in IT projects at various stages: frontend, backend, testing, debugging, and configuration.

Project Background

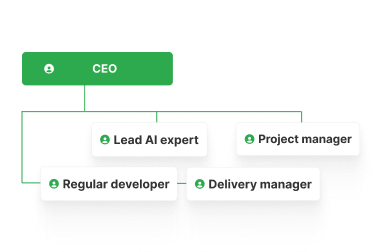

The idea of creating the bot wasn’t spontaneous: it grew out of the routine that project managers deal with every day. The company scaled up development across all its IT projects and began building an AI assistant, NOXS.AI, capable of tracking project data, monitoring deadlines, automatically sending reminders to task assignees, and gathering the necessary metrics. The development team consisted of the CEO, a lead AI expert, a regular developer, a project manager, and a delivery manager.

Project Team

Engagement Model

Fixed Price

Tech stack

Phase #1: Prototype Launch

We tested each stage of AI-agent creation internally. First, we needed to verify a hypothesis: whether the digital traces a project leaves behind contain enough information to answer project management questions.

A prototype (PoC) was developed for this purpose. We exported all data from Jira and Confluence, uploaded it into a locally deployed LLM (minimizing leak risks), and started asking it questions based on the materials provided, such as typical project management inquiries:

- “How many tasks were delivered?”
- “How many were not delivered?”
- “Are there tasks exceeding estimated time?”
- “How many tasks are assigned to a particular developer?”
- “Calculate KPI,” etc.

The large language model analyzed the data and provided responses; this was the bot’s only function at this stage. The prototype launch enabled us to test the “Deadline Control” module and explore the potential of RAG (retrieval-augmented generation, in which information retrieved from internal and external sources is supplied to the model along with the question).
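The retrieval step behind RAG can be illustrated with a minimal sketch. This is not the team's implementation: the sample records, the token-overlap scoring, and the function names (`retrieve`, `build_prompt`) are all assumptions made for illustration, and a production system would more likely rely on embeddings and a vector index.

```python
import re
from collections import Counter

# Hypothetical exported snippets; real Jira/Confluence exports would be far larger.
DOCUMENTS = [
    "PROJ-1 Login page. Status: Done. Estimate: 8h. Time spent: 6h. Assignee: alice",
    "PROJ-2 Payment form. Status: In Progress. Estimate: 5h. Time spent: 9h. Assignee: bob",
    "Confluence: Release checklist. Every task needs a description and an estimate.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(question: str, document: str) -> int:
    """Count how many question tokens also occur in the document."""
    q = Counter(tokenize(question))
    d = Counter(tokenize(document))
    return sum(min(q[token], d[token]) for token in q)


def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents with the highest token overlap."""
    ranked = sorted(documents, key=lambda doc: score(question, doc), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, documents: list[str], top_k: int = 2) -> str:
    """Assemble the text that would be sent to the language model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

The string returned by `build_prompt` would then be passed to the locally deployed LLM, so the model answers from retrieved project data rather than from memory alone.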

The main risk of using a generative model is inaccurate answers. We mitigated it through persistent testing and evaluations by actual project managers, testers, and regular users. They asked a wide range of questions, and the semantic errors they uncovered prompted system adjustments and retraining.

Working on the PoC took four weeks, including one week dedicated to training the language model for quality data analysis and accurate response delivery.

Phase #2: Creating a Minimum Viable Product

Once the PoC was ready, we proceeded to develop an MVP (Minimum Viable Product), which included a separate information processing module. While the prototype’s language model processed data exported from Jira and Confluence, the MVP connected directly to these platforms, capturing changes in real time.

We also integrated the codebase from GitLab. Now the system could not only monitor projects and answer questions but also trigger five rules, implemented manually by programmers, whenever specified conditions were met.

Examples of such rules:

- If a developer creates a task in Jira without a description, a message should be sent to the general chat tagging that developer after one hour.
- If a task in GitLab shows no updates for an extended period, a notification should be sent to the responsible person.
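The two example rules above could be hand-coded roughly as follows. This is a sketch under stated assumptions, not the MVP's actual code: the `Task` fields, the function names, the message wording, and the 3-day idle threshold are all hypothetical (the source only says "an extended period").

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Task:
    key: str
    author: str          # who created the task
    assignee: str        # who is responsible for it
    description: str
    created_at: datetime
    updated_at: datetime


def missing_description_alert(task: Task, now: datetime,
                              grace: timedelta = timedelta(hours=1)) -> Optional[str]:
    """General-chat message if the task still has no description after the grace period."""
    if not task.description.strip() and now - task.created_at >= grace:
        return f"@{task.author}: task {task.key} has no description"
    return None


def stale_task_alert(task: Task, now: datetime,
                     max_idle: timedelta = timedelta(days=3)) -> Optional[str]:
    """Message for the responsible person if the task has not been updated recently.

    The 3-day default is an assumption chosen for illustration.
    """
    if now - task.updated_at >= max_idle:
        return (f"@{task.assignee}: task {task.key} has had no updates "
                f"since {task.updated_at:%Y-%m-%d}")
    return None
```

Each function returns `None` when its condition is not met, so a scheduler could call every rule for every task and forward only the non-empty results to the chat.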

However, difficulties arose with configuring SQL queries to the database via the agent framework. To resolve this, we examined the framework code, identified the issue source, and adjusted the input settings.

In addition to these improvements, the MVP introduced a single reporting function. For testing, we chose SWOT analysis. Reports were generated by the LLM and structured by the bot into four lists: project strengths, weaknesses, opportunities, and threats.
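Structuring a SWOT report into four lists might look like the sketch below. The expected text layout (a heading line per section followed by bullet lines) and the `parse_swot` name are assumptions; the source does not describe the format the LLM actually produced.

```python
SECTIONS = ("strengths", "weaknesses", "opportunities", "threats")


def parse_swot(text: str) -> dict[str, list[str]]:
    """Split LLM output into four lists, keyed by SWOT section name."""
    report: dict[str, list[str]] = {name: [] for name in SECTIONS}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        heading = line.rstrip(":").lower()
        if heading in SECTIONS:
            current = heading          # a new section starts here
        elif current is not None:
            # Drop leading bullet characters and keep the item text.
            report[current].append(line.lstrip("-* ").strip())
    return report
```

Lines that appear before the first recognized heading are ignored, which keeps any introductory sentence from the model out of the report.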

Development and testing of the MVP took about 1.5-2 months, with 1.5-2 days dedicated to training the system for SWOT analysis. Significant productivity improvements were noticed within the first two weeks of using the MVP internally.

Phase #3: Expanding Functionality to AI-Agent

The third phase transformed the assistant from MVP into a market-ready product. Implemented improvements:

- Expanded the number of projects the assistant could handle. Previously, the bot analyzed data from just one project in GitLab, Jira, and Confluence; it now covers multiple projects simultaneously, configured through an interactive setup within chats.
- Added the capability to create rules directly from text prompts. Project managers could specify, for instance, “If a task description isn’t provided upon creation, notify me after one hour,” or “If no description appears within an hour, notify the CEO after two hours,” and so on without limit.
- Added the option for individual chat communication with the bot, enabling rules that send messages either to general chats or privately.

Example scenario:

- “If a developer forgets to provide a task description, send them a private notification.”
- “If there’s still no description after an hour, notify the project manager.”
- “If another hour passes without a description, send a message to the general chat.”
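The scenario above is an escalation chain, which can be modeled as an ordered list of steps. The sketch below is illustrative only: `EscalationStep`, `CHAIN`, and `due_steps` are hypothetical names, the first notification is assumed to go out immediately, and the project manager is assumed to be notified privately.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class EscalationStep:
    after: timedelta   # time without a description before this step fires
    recipient: str     # who gets notified
    channel: str       # "private" or "general"


# One step per sentence of the scenario; delays are measured from the first reminder.
CHAIN = [
    EscalationStep(timedelta(0), "developer", "private"),
    EscalationStep(timedelta(hours=1), "project_manager", "private"),
    EscalationStep(timedelta(hours=2), "team", "general"),
]


def due_steps(elapsed: timedelta, already_sent: int) -> list[EscalationStep]:
    """Return steps whose delay has passed and that have not been sent yet.

    `already_sent` is the number of steps from the start of CHAIN already delivered.
    """
    return [
        step for index, step in enumerate(CHAIN)
        if index >= already_sent and elapsed >= step.after
    ]


# 90 minutes in, with the developer already reminded once:
for step in due_steps(timedelta(minutes=90), already_sent=1):
    print(step.recipient, step.channel)   # project_manager private
```

Keeping the chain as data rather than nested conditions means that a longer scenario only adds entries to the list.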

Generating executable code from users’ text requests was a challenge, in particular code that checks a rule against a specific field within the data structure. It took extensive experimentation and numerous prompt iterations. The generated code was executed in an isolated infrastructure so that existing setups weren’t disrupted. Checking the code for errors, debugging it, and stabilizing it proved complex.
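As a rough illustration of keeping generated code away from the main application, the sketch below runs a rule in a separate Python interpreter with a timeout. A child process is a much weaker boundary than the isolated infrastructure described here, and everything in the example (`GENERATED_RULE`, `run_rule`, the JSON-over-stdin contract) is an assumption rather than the product's design.

```python
import json
import subprocess
import sys

# Stand-in for code an LLM might generate from "notify if the description is empty".
GENERATED_RULE = """
import json, sys
task = json.load(sys.stdin)
print(json.dumps(not task.get("description", "").strip()))
"""


def run_rule(code: str, task: dict, timeout_s: float = 5.0) -> bool:
    """Run rule code in a separate interpreter and return its boolean verdict."""
    result = subprocess.run(
        [sys.executable, "-I", "-c", code],   # -I: isolated mode, ignores user site and env vars
        input=json.dumps(task),               # the task is passed as JSON on stdin
        capture_output=True,
        text=True,
        timeout=timeout_s,                    # raises TimeoutExpired if the code hangs
    )
    if result.returncode != 0:
        raise RuntimeError(f"rule failed: {result.stderr.strip()}")
    verdict = json.loads(result.stdout)
    if not isinstance(verdict, bool):
        raise ValueError("rule must print a JSON boolean")
    return verdict
```

Validating that the output is a JSON boolean gives the caller one narrow, checkable contract, which helps when the code itself cannot be trusted to be correct.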

Another fascinating task at this stage involved training the AI agent for more “human-like” communication. The bot learned to joke, included emojis in messages, and was perceived as a virtual deputy project manager by the team.

The third phase took approximately 2.5 months.

Digital Assistant Overview

Our product is an AI agent whose functionality is limited only by the modules employed. These modules are easily configurable to align with any corporate regulations, templates, and business processes in IT project communication. There are 11 modules:

- Contextual Help Module “Help”. Provides project tips, identifies risks, offers solutions to optimize integration, and provides interactive training for new users.
- Knowledge Management Module “Core Mind”. Organizes and structures all project information: the database is populated automatically, intelligent contextual search becomes available, recurring errors are noted for follow-up, and documents are linked to the relevant projects automatically.
- Execution Monitoring Module “Pulse Tracker”. Provides 24/7 real-time monitoring of deadlines, costs, and resources. This includes deadline reminders, bottleneck identification, analysis of the causes of delays, task forecasts, cost estimation, and budget control.
- Communication Efficiency Module “Collab Booster”. Responsible for the quality and transparency of interactions: assesses the completeness of feedback, generates activity analysis reports, and detects and escalates unresolved issues.
- Issue Management Module “Risk Shield”. Responds promptly to emerging risks, issues warnings, proposes solutions, and calls an emergency meeting if necessary.
- Voice Command Module “Voice Pilot”. Enables voice control of tasks: creating them, assigning executors, checking their status, and automating them.
- Team Efficiency Module “Team Insight”. Analyzes employee performance, assigns tasks by type and complexity, monitors workload, and tracks successful patterns and context switching.
- Process Optimization Module “Flow Optimizer”. Customizes templates, automatically checks custom rules, suggests improvements based on project history analysis, and builds process change scenarios.
- Sprint Planning Module “Sprint Master”. Accelerates and optimizes the planning of Agile sprints.
- IT Integration Module “Flow Grid”. The core module for integrating systems and chats. It provides seamless API integration with the IT team’s key development and communication tools, creating a unified ecosystem.
- Report Visualization Module “Smart Report”. Automatically generates reports, creates charts and graphs, monitors timelines, and makes planning recommendations.

The solution integrates with Jira, Confluence, and GitLab, as well as with the messaging apps Slack or Telegram, and supports webhooks for interaction with corporate software.

Final Results

NOXS.AI is an advanced digital assistant that transforms project management and significantly improves efficiency for IT project managers and development leaders. The tool automates routine processes and optimizes the project management ecosystem.

The assistant frees up to 20% of project managers’ working time.

With the power of artificial intelligence, the assistant takes on the tasks of monitoring budgets, deadlines, and risks, anticipating potential problems and proposing solutions before those problems occur. Generating reports, managing workflows, and analyzing key metrics now take seconds, allowing managers to focus on making informed decisions and on strategic development.

The implementation of the assistant has not only reduced labor costs for routine processes but has also significantly reduced managers’ stress levels, giving them more time for creative and high-level tasks.

The assistant has become an indispensable partner in achieving ambitious goals.

Need to develop a similar project?

Mobile e-wallet application that lets users link their debit and credit cards to their accounts through banking partners, create e-wallets and virtual cards, and use them for money transfers, cash withdrawals, bills and online payments, etc.

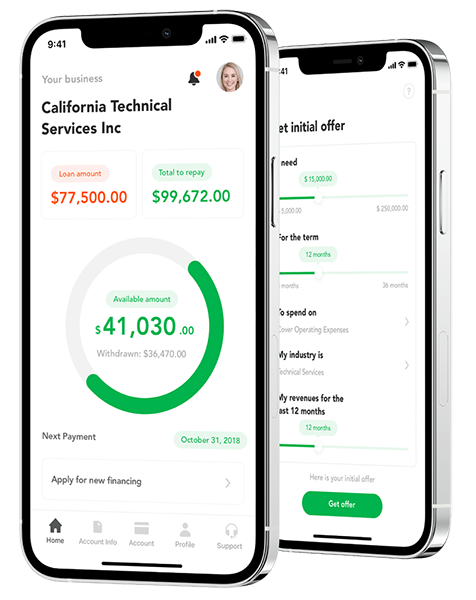

Digital lending platform (and a matching mobile app client) with an automated loan-lending process.

A digital wallet app ecosystem for Coinstar, a $2.2B global fintech company — including mobile digital wallet apps, ePOS kiosk software, web applications, and a cloud API server enabling cryptocurrency and digital asset trading, bank account linking, crypto-fiat-cash conversions, and online payments.