AI Secretary for the Lab Technician: Rapid Entry of Sample Results by Voice

Sometimes our clients themselves, or more precisely, their unique needs, become our inspiration. One such project was launched at the request of a petrochemical company that wanted to increase the productivity of its lab technicians by introducing artificial intelligence into their workflow.

About the Client

A major enterprise operating within the petrochemical industry, engaged in the large-scale production, processing, and distribution of chemical products derived from petroleum and natural gas, serving both domestic and international markets across various industrial sectors.

Project Background

The challenge was that the company’s laboratories cannot be equipped with computers or with conventional input devices such as keyboards, mice, and touchpads: only explosion-proof devices are allowed. At the same time, the client wanted lab technicians to be able to record their test results quickly, without manual data entry.

NOXS.AI took on this challenge and found a solution. Our task was to create an AI assistant for the lab technician that could be controlled through an explosion-proof, voice-activated headset. The technician dictates sample results and listens to previously entered readings, while all information is entered into the plant portal automatically.

Engagement Model

Fixed Price

Project Team

Solution Architect, 2 .NET developers, 2 iOS developers + iOS Tech Lead, 2 Android developers, 2 QA engineers, BA, PM, Designer, AQA, DevOps, 2 Front-End developers; 20 members in total

Solution Overview

Interaction between a lab technician and an AI assistant

For the client, the solution is a web application that runs in a browser. The technician talks to the bot through an explosion-proof wireless headset connected to the computer. The assistant’s code name is “Marfusha”. From a technical point of view, it has several components:

- Lightweight Vosk modules. They recognize the keywords that activate and deactivate the assistant.

- GigaAM from Sberbank (a Whisper analogue). It starts working once the assistant is turned on. Its task is to transcribe speech with high quality, process the resulting text, and organize the basic logic of the entire workflow.

- TTS (text-to-speech). It synthesizes speech, so the assistant not only understands voice commands but can also talk to the user and answer them.
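The division of labor between these components can be illustrated with a small router. The lightweight keyword model sees every audio chunk, while the heavier transcriber runs only after the assistant has been activated. This is a minimal sketch under stated assumptions, not the project’s code. The engines are modeled as plain functions from an audio chunk to text; real code would wrap a small Vosk model and GigaAM. The wake phrase comes from the sample script below. The deactivation phrase and all names (`AssistantRouter`, `spot_keyword`, `transcribe`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical engine signature: audio chunk in, recognized text out.
# Real code would wrap a small Vosk model (spotter) and GigaAM (transcriber).
SpeechToText = Callable[[bytes], str]


def normalize(text: str) -> str:
    """Lower-case and drop punctuation: 'Marfusha, start.' -> 'marfusha start'."""
    kept = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


@dataclass
class AssistantRouter:
    spot_keyword: SpeechToText  # lightweight model, sees every chunk
    transcribe: SpeechToText  # heavyweight model, used only while active
    wake_phrase: str = "marfusha start"  # from the sample script
    sleep_phrase: str = "marfusha sleep"  # hypothetical deactivation phrase
    active: bool = False
    transcripts: List[str] = field(default_factory=list)

    def feed(self, chunk: bytes) -> None:
        keyword = normalize(self.spot_keyword(chunk))
        if not self.active:
            # Idle: only the cheap keyword model processes audio.
            self.active = keyword == self.wake_phrase
            return
        if keyword == self.sleep_phrase:
            self.active = False
            return
        # Active: pass the chunk to the full transcriber.
        self.transcripts.append(self.transcribe(chunk))


# Usage with stub engines that "recognize" a chunk as its own bytes.
router = AssistantRouter(spot_keyword=bytes.decode, transcribe=bytes.decode)
for chunk in [b"hello", b"Marfusha, start.", b"one two three four",
              b"Marfusha sleep", b"ignored"]:
    router.feed(chunk)
print(router.transcripts)
```

Keeping the expensive transcriber idle until the wake phrase is heard is what makes an always-on microphone affordable.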

The AI assistant is launched and starts “listening” after the user presses the “Start” button in a dedicated browser tab. Each scenario is a chain of sequential steps:

- Authorization. “Marfusha” asks for a PIN code, and the lab technician says it aloud (four digits), confirming that they have an account in the system.

- Protocol selection. At the bot’s request, the user names the protocol under which the test is conducted. A protocol is the set of parameters needed to record a result: pressure, content of a given substance, color, temperature, etc.

- Sample selection. A sample is identified by a numeric index (1, 2, 3, etc.). One protocol can include any number of samples, with different or identical values. If the lab technician names a sample number that is not yet in the database, the assistant adds it to the system automatically.

- Entering values. For a new sample, the bot asks the technician to dictate values for the parameters defined in the protocol. If the ID of an existing sample is named, “Marfusha” reads out the parameters previously entered for it and offers three actions:
  - “Missing”: lets the technician enter values for parameters that have not been filled in yet. For the assistant to accept them, the user says another command, “Marfusha, stop”. The bot then repeats the values and offers the same three actions again.
  - “Repeat”: lets the technician record new values for all parameters of the protocol. Again, “Marfusha, stop” confirms them.
  - “Finish”: the assistant saves the entered data to the database and prompts the user to select a new protocol, after which the steps above are repeated.

- Ending the session. To make the assistant stop “listening”, the user presses the “Stop” button in the browser window. The sample results recorded under different protocols can be exported as reports (PDF, Excel, Word, or any other format) and then sent to the company’s internal system for processing or to individual recipients by email.
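The scenario above is essentially a small state machine. The sketch below models it under stated assumptions. The PIN store, the protocol table, and the sample database are hypothetical in-memory dictionaries standing in for the plant portal. The `Session` class and its `say`/`dictate` methods are illustrative, not the project’s API. Commands are assumed to arrive already transcribed, with the PIN and sample numbers normalized to digits, and dictated values are assumed to be already parsed into a dictionary.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical in-memory stand-ins for the plant portal.
PINS = {"1234"}
PROTOCOLS: Dict[str, List[str]] = {"external": ["pressure", "isobutane", "methane"]}
SAMPLES: Dict[Tuple[str, str], Dict[str, float]] = {}
ACTIONS = "Please select one of the actions: Missing, Finish, Repeat."


@dataclass
class Session:
    state: str = "pin"
    protocol: str = ""
    sample: str = ""
    pending: Dict[str, float] = field(default_factory=dict)

    def _readback(self) -> str:
        # Values are read back so the technician can catch recognition errors.
        return ", ".join(f"{p} - {self.pending[p]}"
                         for p in PROTOCOLS[self.protocol] if p in self.pending)

    def _ask(self, params: List[str]) -> str:
        self.state = "values"
        return "Please name the following parameters: " + ", ".join(params) + "."

    def say(self, text: str) -> str:
        """Handle one spoken command, already transcribed to text."""
        text = text.strip().lower()
        if self.state == "pin":
            if text not in PINS:
                return "The PIN code is incorrect. Please try again."
            self.state = "protocol"
            return "The PIN code is correct. Please state the name of the protocol."
        if self.state == "protocol":
            if text not in PROTOCOLS:
                return "Unknown protocol. Please state the name of the protocol."
            self.protocol, self.state = text, "sample"
            return "Please state the sample number."
        if self.state == "sample":
            self.sample = text
            key = (self.protocol, text)
            if key not in SAMPLES:
                SAMPLES[key] = {}  # unknown number: add the sample automatically
                self.pending = {}
                return self._ask(PROTOCOLS[self.protocol])
            self.pending = dict(SAMPLES[key])
            self.state = "action"
            return (f"Sample number {text} has been found. "
                    f"Parameters entered: {self._readback()}. {ACTIONS}")
        if self.state == "action":
            if text == "missing":
                return self._ask([p for p in PROTOCOLS[self.protocol]
                                  if p not in self.pending])
            if text == "repeat":
                self.pending = {}
                return self._ask(PROTOCOLS[self.protocol])
            if text == "finish":
                SAMPLES[(self.protocol, self.sample)] = dict(self.pending)
                self.state = "protocol"
                return ("Thank you, the result will be saved. "
                        "Please state the name of the protocol.")
            return ACTIONS
        return "Please dictate the values, then say: Marfusha, stop."

    def dictate(self, values: Dict[str, float]) -> str:
        """Accept values dictated before 'Marfusha, stop'; unknown names are ignored."""
        if self.state != "values":
            return "No values are expected right now."
        known = PROTOCOLS[self.protocol]
        self.pending.update({k: v for k, v in values.items() if k in known})
        self.state = "action"
        return f"Entered parameters: {self._readback()}. {ACTIONS}"


# Replaying the sample script against a pre-filled sample 1.
SAMPLES[("external", "1")] = {"pressure": 65.0, "isobutane": 5.0, "methane": 67.0}
session = Session()
session.say("1234")
session.say("External")
print(session.say("1"))
session.say("Repeat")
print(session.dictate({"pressure": 15.04, "isobutane": 16.5, "methane": 4.067}))
print(session.say("Finish"))
```

The assistant reads the values back after every dictation. That read-back gives the technician a chance to catch a misrecognized number before choosing Finish.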

SAMPLE SCRIPT

– Marfusha, start.

Marfusha: Enter the pin code.

– One, two, three, four.

Marfusha: The pin code is correct. Please state the name of the protocol.

– External.

Marfusha: Please state the sample number.

– One.

Marfusha: Sample number One has been found. Parameters entered: pressure – 65.0, isobutane – 5.0, methane – 67.0. Please select one of the actions: Missing, Finish, Repeat.

– Repeat.

Marfusha: Please name the following parameters: pressure, isobutane, methane.

– Pressure – 15.04, isobutane – 16.5, methane – 4.067. Marfusha, stop.

Marfusha: Entered parameters: pressure – 15.04, isobutane – 16.5, methane – 4.067. Please select one of the actions: Missing, Finish, Repeat.

– Finish.

Marfusha: Thank you, the result will be saved. Please state the name of the protocol.

…
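The dictation turn in the script (“Pressure – 15.04, isobutane – 16.5, methane – 4.067. Marfusha, stop.”) has to be turned into parameter values. Below is a minimal sketch, assuming the transcriber already emits digits rather than number words. `parse_dictation` is a hypothetical helper. Letting the last mention of a parameter win, so that a spoken self-correction overrides the earlier value, is our own design choice and is not described in the source.

```python
import re
from typing import Dict, Iterable

# End-of-dictation command from the script; the comma is optional in ASR output.
STOP = re.compile(r"\bmarfusha,?\s+stop\b")


def parse_dictation(transcript: str, expected: Iterable[str]) -> Dict[str, float]:
    """Map each expected parameter to the number spoken right after its name."""
    text = STOP.split(transcript.lower(), maxsplit=1)[0]  # drop the stop command
    values: Dict[str, float] = {}
    for name in expected:
        # name, optional separator (hyphen, en dash or colon), number with . or , decimals
        pattern = rf"\b{re.escape(name)}\s*[-\u2013:]?\s*(\d+(?:[.,]\d+)?)"
        found = re.findall(pattern, text)
        if found:
            # The last mention wins, so "methane 4, no, methane 5" records 5.
            values[name] = float(found[-1].replace(",", "."))
    return values


result = parse_dictation(
    "Pressure \u2013 15.04, isobutane \u2013 16.5, methane \u2013 4.067. Marfusha, stop.",
    ["pressure", "isobutane", "methane"],
)
print(result)
```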

The Course of the Project

An organizational “race”

The planned timeframe for the project was one month. However, we ran into a number of obstacles that significantly slowed the work. Most of them turned out to be related not to the AI side, i.e. speech recognition and synthesis (where we had expected difficulties), but to the technical organization of the assistant itself.

We built the first version of the solution as a Windows application running directly on the computer. However, it turned out to be too “heavy” and inconvenient to use, which could have reduced the assistant’s efficiency. That’s why we switched to a web version that the user runs in a browser.

A “side” task was negotiating with the company’s data security officers to open the ports needed for WebRTC, which we used to stream audio between the server and the client in real time. Debugging WebRTC itself also took some time.

The work done by our team included the following steps:

- Selecting voice recognition and voice synthesis tools. Over time, many of them were replaced with more efficient and convenient ones.

- Laying out the main components and revising the architecture based on experimental results.

- Refining and implementing the business process used in production.

- Testing and stabilization.

We solved some tasks with the help of external DevOps and WebRTC consultants. Implementing the main stages of the project took 4 months in total, including testing cycles, the search for solutions, and optimization. The budget was $23,000.

“Marfusha” understands chemistry.

To determine the best control method and logic for the voice assistant, we needed to familiarize it thoroughly with the specifics of chemistry. In particular, we had to teach it to recognize:

- numbers and fractions (especially decimals, which people pronounce in different ways);

- complex names of chemical substances;

- formulas.
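Decimals are the trickiest part, because the same value can be spoken as “fifteen point zero four” or “fifteen point oh four”. The sketch below converts English number words to a float to illustrate the idea. The real assistant worked with the client’s language. The word lists, the accepted separator words (`point`, `comma`, `dot`), and the function names are all assumptions. Fractional words are read digit by digit unless they form a compound number such as “twenty five”.

```python
from typing import List

UNITS = {w: i for i, w in enumerate(
    "zero one two three four five six seven eight nine ten eleven twelve "
    "thirteen fourteen fifteen sixteen seventeen eighteen nineteen".split())}
TENS = {w: 10 * i for i, w in enumerate(
    "twenty thirty forty fifty sixty seventy eighty ninety".split(), start=2)}
DIGITS = {w: d for w, d in UNITS.items() if d < 10}
DIGITS["oh"] = 0  # "fifteen point oh four"
SEPARATORS = {"point", "comma", "dot"}  # assumed spoken decimal separators


def words_to_int(words: List[str]) -> int:
    """'three hundred sixty five' -> 365 (covers 0 to 999,999)."""
    total, current = 0, 0
    for w in words:
        if w in UNITS:
            current += UNITS[w]
        elif w in TENS:
            current += TENS[w]
        elif w == "hundred":
            current *= 100
        elif w == "thousand":
            total += current * 1000
            current = 0
        elif w != "and":
            raise ValueError(f"unexpected number word: {w!r}")
    return total + current


def spoken_to_number(phrase: str) -> float:
    """'fifteen point zero four' -> 15.04."""
    words = phrase.lower().replace("-", " ").split()
    sep = next((i for i, w in enumerate(words) if w in SEPARATORS), None)
    if sep is None:
        return float(words_to_int(words))
    whole, frac = words_to_int(words[:sep]), words[sep + 1:]
    if all(w in DIGITS for w in frac):
        digits = "".join(str(DIGITS[w]) for w in frac)  # "zero four" -> "04"
    else:
        digits = str(words_to_int(frac))  # "twenty five" -> "25"
    return float(f"{whole}.{digits}")


for phrase in ["fifteen point zero four", "sixty-five", "four point oh six seven"]:
    print(phrase, "->", spoken_to_number(phrase))
```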

This was our first experience of this kind, and a very valuable one. The AI assistant understands words and parameters that other voice bots cannot, and this is what makes the solution unique. Some chemical terms are 15 to 20 letters long, and Marfusha recognizes them as well. Where voice is the only available input method, automating the entry of such complex data significantly speeds up the workflow.
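A general-purpose recognizer can easily garble a long chemical name by a letter or two. One simple way to illustrate post-correction is to snap each transcribed word to the closest entry in a domain lexicon using Python’s standard `difflib.get_close_matches`. This is not necessarily how Marfusha was adapted, since the source does not say. The lexicon and the 0.8 cutoff are assumptions, and multi-word names would need phrase-level matching.

```python
import difflib
from typing import List

# Hypothetical domain lexicon; a real one would be built from the portal's protocols.
LEXICON = ["pressure", "isobutane", "methane", "ethylbenzene", "methylcyclopentane"]


def snap_terms(words: List[str], lexicon: List[str] = LEXICON,
               cutoff: float = 0.8) -> List[str]:
    """Replace each word with its closest lexicon term when similarity >= cutoff."""
    snapped = []
    for word in words:
        match = difflib.get_close_matches(word.lower(), lexicon, n=1, cutoff=cutoff)
        snapped.append(match[0] if match else word)  # keep unknown words as-is
    return snapped


print(snap_terms(["methylcyclopentan", "isobutan", "15.04"]))
```

A high cutoff keeps ordinary words and numbers untouched. Only near-misses of known terms are corrected.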

Another important task was to reduce the assistant’s response latency. The difficulty is that, given the nature of the dialogues, speeding up responses directly affects the quality of the result. Every user command passes through a pipeline:

- Voice recognition. Errors can creep in at this stage when a person speaks too quietly or misspeaks.

- Processing by a large language model (LLM).

- Decision making.

- Voice synthesis.
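Every command passes through all four stages in sequence, so the response time is the sum of the stage latencies. The first step in reducing it is measuring where the time goes. Below is a minimal sketch with hypothetical stub stages standing in for recognition, the LLM, decision logic, and synthesis. `run_pipeline` is an illustrative name, not part of the project.

```python
import time
from typing import Any, Callable, Dict, List, Tuple

Stage = Tuple[str, Callable[[Any], Any]]


def run_pipeline(stages: List[Stage], payload: Any) -> Tuple[Any, Dict[str, float]]:
    """Run the stages in order and record each one's wall-clock time in seconds."""
    timings: Dict[str, float] = {}
    for name, step in stages:
        start = time.perf_counter()
        payload = step(payload)
        timings[name] = time.perf_counter() - start
    return payload, timings


# Hypothetical stubs for ASR, the LLM, decision logic, and TTS.
STAGES: List[Stage] = [
    ("recognition", lambda audio: "pressure 15.04"),
    ("llm", lambda text: {"intent": "enter_values", "text": text}),
    ("decision", lambda parsed: f"Entered parameters: {parsed['text']}."),
    ("synthesis", lambda reply: reply.encode("utf-8")),  # stand-in for audio
]

reply_audio, timings = run_pipeline(STAGES, b"<audio frame>")
total = sum(timings.values())
slowest = max(timings, key=lambda name: timings[name])
print(f"total {total * 1000:.2f} ms, slowest stage: {slowest}")
```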

At first, there was a noticeable pause between the spoken request and the assistant’s response. While the assistant was silent, the user could not tell whether it was working at all, which made interacting with the bot less comfortable.
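One common way to soften such a pause is to speak a short acknowledgement whenever the real answer is not ready within a threshold. This is shown only as an illustration, not as what the team shipped. Below is a minimal `asyncio` sketch. The filler phrase, the 0.5-second default threshold, and the function names are assumptions.

```python
import asyncio
from typing import Awaitable, Callable, List


async def reply_with_filler(answer: Awaitable[str], speak: Callable[[str], None],
                            threshold: float = 0.5,
                            filler: str = "One moment, please.") -> str:
    """Speak a short acknowledgement if the real answer takes longer than threshold."""
    task = asyncio.ensure_future(answer)
    done, _ = await asyncio.wait({task}, timeout=threshold)
    if not done:
        speak(filler)  # signal that the assistant is still working
    reply = await task
    speak(reply)
    return reply


async def slow_answer(delay: float) -> str:
    await asyncio.sleep(delay)  # stands in for recognition + LLM + synthesis time
    return "Entered parameters: pressure 15.04."


spoken: List[str] = []
asyncio.run(reply_with_filler(slow_answer(0.2), spoken.append, threshold=0.05))
print(spoken)
```

`asyncio.wait` with a timeout does not cancel the pending task, so the full answer is still delivered after the filler.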

We have done a lot of work to reduce response latency, and the assistant now takes 2 to 3 seconds per message. An attempt to bring this down to half a second hurt quality: it remained high only in simple dialogues with common phrases, without complex chemical terms.

Our current goal is to bring response latency as close to zero as possible by adjusting the architecture.

Results

The solution is ready and is undergoing the final stage of debugging on the customer’s side. With our voice assistant, the client’s employees can keep their hands free, complete tasks faster, and no longer need to write data in a notebook: information now goes into the database directly by voice.

The lab technician can dictate current sample results, query previously entered values, and correct past results. The assistant reads back the information it enters, which minimizes errors.

Although the web interface is intuitive, we still provided the client with usage instructions and conducted a series of demonstrations. With our solution, the petrochemical company’s laboratories have become more technologically advanced and meet modern market requirements.

NOXS.AI plans to keep working on making “Marfusha” faster and her dialogues more natural, so that using the AI assistant becomes even more comfortable.

Related Projects

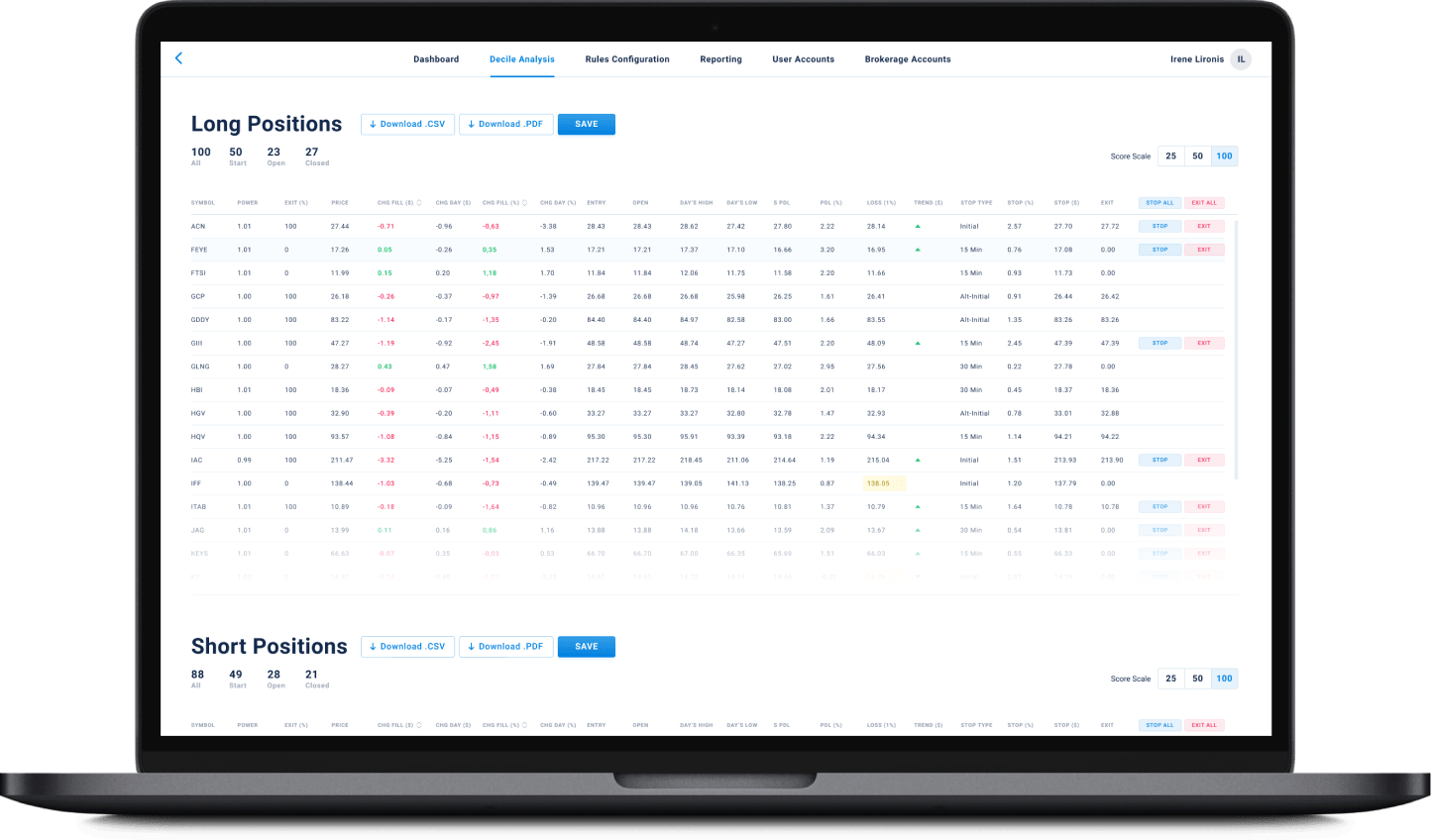

All ProjectsFinancial Data Analytical Platform for one of top 15 Largest Asset Management

Financial Data Analytical Platform for one of top 15 Largest Asset Management

- Fintech

- Enterprise

- ML/AI

- Project Audit and Rescue

AI-based data analytical platform for wealth advisers and fund distributors that analyzes clients’ stock portfolios, transactions, quantitative market data, and uses NLP to process text data such as market news, research, CRM notes to generate personalized investment insights and recommendations.

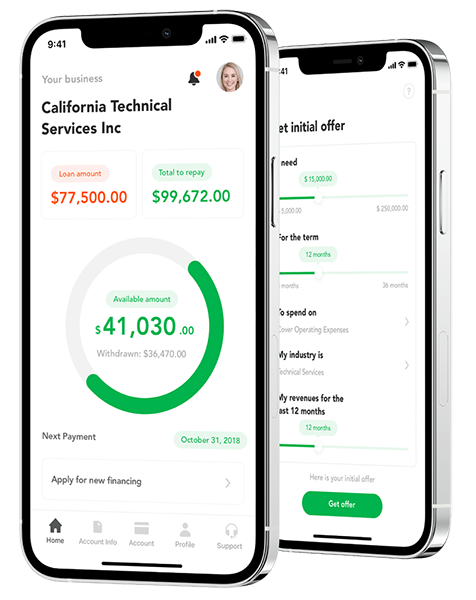

App for Getting Instant Loans / Online Lending Platform for Small Businesses

App for Getting Instant Loans / Online Lending Platform for Small Businesses

- Fintech

- ML/AI

- Credit Scoring

Digital lending platform with a mobile app client fully automating the loan process from origination, online loan application, KYC, credit scoring, underwriting, payments, reporting, and bad deal management. Featuring a custom AI analytics & scoring engine, virtual credit cards, and integration with major credit reporting agencies and a bank accounts aggregation platform.

Contact Form

Drop us a line and we’ll get back to you shortly.

For Quick Inquiries

Offices

8, The Green, STE road, Dover, DE 19901

Żurawia 6/12/lok 766, 00-503 Warszawa, Poland