Large-Scale Deduplication: Preparing an AI Assistant That Knows Everything About the Company

Our client is a producer and processor of hydrocarbon raw materials. The company needed a chatbot, an AI assistant, to boost employee productivity by speeding up information retrieval and answering any question about the company. Notably, the client engaged us on the basis of a recommendation, underscoring their trust in our expertise.

About the Client

The client explores, extracts, and processes hydrocarbon raw materials, such as crude oil and natural gas, transforming them into refined products, petrochemicals, and other commercially valuable energy derivatives.

Project Background


The client tasked NOXS.AI with creating an AI assistant capable of answering questions based on all existing corporate documents. This was a significant challenge given the volume and diversity of the data: over 100,000 files in various formats, including photographs, paper scans, Word documents, Google Docs, Excel spreadsheets, and PDFs.

Engagement Model

Fixed price

Project Team

Solution Architect, 2 .NET developers, 2 iOS developers + iOS Tech Lead, 2 Android developers, 2 QA engineers, BA, PM, Designer, AQA, DevOps, 2 Front-End developers: 20 members in total

Tech stack / Platforms

Solution Overview

The solution for integrating a chatbot with the client company’s Knowledge Base (KB) included:

- Knowledge update and management service. The KB and its documents are updated automatically, obsolete and duplicate data are removed (deduplication), and changes to documents are tracked through versioning.

- Assistant API. It consists of the main API for user interaction and separate APIs for: the LLM (a natural language processing model), which generates answers to queries; embeddings, which vectorize text to find contextually relevant information; and databases (Postgres), which store documents, indexes, and related metadata.

- Gradio-based frontend. A user-friendly interface for both company employees and system administrators. Gradio makes the GUI easy to customize and use without complex development.

- Integration with the company’s other applications. The assistant can connect to the client’s internal systems (e.g., ERP, CRM, document management systems, BI platforms, and other corporate applications) via standard APIs or custom connectors.

- Database for storage and processing. Postgres stores structured data, and indexes support fast search across the system, including text fields, metadata, and digitized documents.

- Data protection and access control. Access rights to individual functions are configured according to employee roles. Data is encrypted, including traffic between the API and the database.
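How the embeddings service and role-based access might fit together in a single retrieval step can be sketched as follows. This is a minimal, hypothetical illustration, not the production Assistant API: the `Chunk` record, the `allowed_roles` field, and the toy three-dimensional vectors are all assumptions. A real deployment would obtain vectors from an embedding model and would likely run the similarity search inside the database rather than in Python.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Chunk:
    # Hypothetical record: a text chunk, its embedding, and the roles allowed to read it.
    doc_id: str
    text: str
    vector: list[float]
    allowed_roles: set[str] = field(default_factory=set)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two dense vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], chunks: list[Chunk], user_roles: set[str], k: int = 3) -> list[Chunk]:
    """Return the k chunks most similar to the query among those the user may read."""
    # Access check first, so a forbidden chunk can never be ranked or returned.
    visible = [c for c in chunks if c.allowed_roles & user_roles]
    visible.sort(key=lambda c: cosine(query_vec, c.vector), reverse=True)
    return visible[:k]


# Toy 3-dimensional vectors stand in for real embedding-model output.
chunks = [
    Chunk("hr-01", "Vacation policy text", [0.9, 0.1, 0.0], {"employee", "hr"}),
    Chunk("fin-07", "Budget approval text", [0.8, 0.2, 0.1], {"finance"}),
    Chunk("it-03", "VPN setup guide text", [0.1, 0.9, 0.2], {"employee"}),
]
hits = retrieve([1.0, 0.0, 0.0], chunks, user_roles={"employee"}, k=2)
print([c.doc_id for c in hits])  # → ['hr-01', 'it-03']
```

In this example `fin-07` is closer to the query than `it-03`, but it is never returned to a user without the `finance` role, because filtering happens before ranking.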

The system is thus a modular and scalable solution suitable for integration into corporate processes, with a focus on automation and quality of service for AI-assisted requests.

The Course of the Project

Step 1. Deduplication

Before the AI assistant could be effective, we needed to eliminate redundant documents in the archive. The same document often existed in multiple versions across the company’s data repository: for example, a policy might appear as a portal publication, an email attachment, and an original signed order. The first step was therefore large-scale deduplication to clear the archive of repeats and inconsistencies.

We carried out this process step by step:

1. First, we found obvious duplicates by comparing simple hashes and removed them.

2. Then we searched for similar documents and grouped them, including with clustering. Using a TF-IDF approach, this initial processing was fast.

3. Next, we used clustering to find files that covered the same topics, as well as files that were similar in content but worded differently: user instructions for applications, application templates, sample policy briefs, etc.

4. Finally, we extracted version information and metadata from the documents, which showed us which ones were more relevant.
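The first, second, and fourth steps can be illustrated with a compact sketch. It makes several assumptions not stated in the source: the "simple hash" is taken to be SHA-256 over lowercased, whitespace-normalized text; near-duplicates are grouped by TF-IDF cosine similarity with an illustrative threshold of 0.8, using a greedy single-pass grouping in place of a full clustering algorithm; and each document is assumed to already carry a `version` value extracted from its metadata.

```python
import hashlib
import math
import re
from collections import Counter


def exact_dedup(docs):
    """Step 1: keep the first document for each SHA-256 hash of its normalized text."""
    seen, unique = set(), []
    for doc in docs:
        normalized = " ".join(doc["text"].lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


def tfidf_vectors(texts):
    """Sparse TF-IDF vectors (term -> weight) with a smoothed IDF."""
    tokenized = [re.findall(r"\w+", t.lower()) for t in texts]
    n = len(tokenized)
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({
            term: (count / len(tokens)) * (math.log((1 + n) / (1 + df[term])) + 1)
            for term, count in tf.items()
        })
    return vectors


def cosine(a, b):
    """Cosine similarity of two sparse vectors."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def group_similar(docs, threshold=0.8):
    """Step 2 (simplified): attach each doc to the first group whose seed it resembles."""
    groups = []  # list of (seed_vector, member_docs)
    for doc, vec in zip(docs, tfidf_vectors([d["text"] for d in docs])):
        for seed, members in groups:
            if cosine(seed, vec) >= threshold:
                members.append(doc)
                break
        else:  # no similar group found: start a new one seeded by this doc
            groups.append((vec, [doc]))
    return [members for _, members in groups]


def latest_versions(groups):
    """Step 4: keep the highest-versioned document in each group."""
    return [max(members, key=lambda d: d["version"]) for members in groups]


docs = [
    {"id": "a", "text": "Travel expense policy: employees submit receipts within 30 days.", "version": 1},
    {"id": "b", "text": "Travel expense policy: employees submit receipts within 30 days.", "version": 1},
    {"id": "c", "text": "Travel expense policy: employees submit all receipts within 30 days.", "version": 2},
    {"id": "d", "text": "VPN setup guide for remote workers.", "version": 1},
]
unique = exact_dedup(docs)        # "b" is an exact copy of "a" and is dropped
groups = group_similar(unique)    # "a" and "c" are near-duplicates
print([d["id"] for d in latest_versions(groups)])  # → ['c', 'd']
```

Running the cheap hash pass first shrinks the set before the more expensive similarity comparisons, which is the same ordering the steps above follow.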

Along the way, we ran into a few complications. In one case, we found about 15 documents whose content differed only in the name of the subdivision. We had to decide whether to treat them as separate documents or load them into the database as a single unit. In the end, we created one virtual document that applied to all subdivisions. We also cleaned up duplicate chunks.
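One possible way to detect the case described above, documents that are identical except for the subdivision name, is to mask the subdivision name and compare what remains; matching documents can then be merged into one virtual document listing every subdivision it applies to. This sketch is hypothetical: it assumes each document record already carries a `subdivision` field, and the `{SUBDIVISION}` placeholder and record layout are illustrative. It also shows a simple hash-based removal of duplicate chunks.

```python
import hashlib
import re
from collections import defaultdict


def mask_subdivision(text, subdivision):
    """Replace the subdivision name with a placeholder and normalize whitespace."""
    # Word boundaries avoid masking the name inside longer words.
    pattern = r"\b" + re.escape(subdivision) + r"\b"
    masked = re.sub(pattern, "{SUBDIVISION}", text, flags=re.IGNORECASE)
    return " ".join(masked.split())


def merge_into_virtual_docs(docs):
    """Merge docs that are identical once the subdivision name is masked."""
    groups = defaultdict(list)
    for doc in docs:
        key = mask_subdivision(doc["text"], doc["subdivision"]).lower()
        groups[key].append(doc)
    virtual = []
    for members in groups.values():
        first = members[0]
        virtual.append({
            "text": mask_subdivision(first["text"], first["subdivision"]),
            "subdivisions": sorted(d["subdivision"] for d in members),
            "source_ids": [d["id"] for d in members],
        })
    return virtual


def dedup_chunks(chunks):
    """Drop chunks whose lowercased, whitespace-normalized text was already seen."""
    seen, kept = set(), []
    for chunk in chunks:
        key = hashlib.sha256(" ".join(chunk.lower().split()).encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            kept.append(chunk)
    return kept


docs = [
    {"id": "1", "subdivision": "North Refinery",
     "text": "The North Refinery safety officer approves all hot work permits."},
    {"id": "2", "subdivision": "South Terminal",
     "text": "The South Terminal safety officer approves all hot work permits."},
    {"id": "3", "subdivision": "HQ", "text": "HQ staff book meeting rooms through the portal."},
]
for v in merge_into_virtual_docs(docs):
    print(v["subdivisions"], v["text"])
```

Keeping `source_ids` on the virtual document preserves a link back to the originals, which helps if a reviewer later decides a pair should not have been merged.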

Tables with complex layouts were also problematic: a variable number of columns, vertical and horizontal text in the same table, and so on. We handled these as well.
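For tables with a variable number of columns, one simple normalization before Markdown conversion is to pad every row to the width of the widest row. This is a minimal sketch under stated assumptions: the table is taken to be already extracted as a list of rows of cell strings (extraction is not shown), the first row is treated as the header, and vertical text and merged cells are not handled. The example values are made up.

```python
def to_markdown_table(rows):
    """Render ragged rows (lists of cell strings) as a Markdown table.

    The first row is treated as the header; shorter rows are padded with empty cells.
    """
    if not rows:
        return ""
    width = max(len(row) for row in rows)
    # Strip cells, escape pipes that would split a cell, and pad to the common width.
    norm = [[cell.strip().replace("|", "\\|") for cell in row] + [""] * (width - len(row))
            for row in rows]
    lines = ["| " + " | ".join(norm[0]) + " |",
             "| " + " | ".join(["---"] * width) + " |"]
    lines += ["| " + " | ".join(row) + " |" for row in norm[1:]]
    return "\n".join(lines)


# Made-up example: the second row is missing its last cell.
table = [["Unit", "Capacity", "Status"], ["A-1", "120 t/h"], ["B-2", "80 t/h", "Idle"]]
print(to_markdown_table(table))
```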

After deduplication, about 20% of the original file set remained, i.e. about 20,000 documents.

Step 2. Data Preparation Process

With duplicates eliminated, the next major task was to prepare the remaining documents for use by the AI assistant. Besides text, the documents contained images that needed to be recognized and described. This task took us 5 working days.

Given the volume of files, we divided them into several groups according to their original quality. For clarity, here are the two extremes:

- High-quality texts. This group included well-recognized documents with few templates, tables, and signatures: instructions, orders, and clearly structured texts without images. Some of them were processed fully automatically; the rest went through a fairly simple BERT-based algorithm that corrected recognition flaws, repeated characters, and tabulation. Text recognition for this group was performed with Tesseract. All data were converted to Markdown (a simple markup language often preferred for training large language models) and lightly cleaned.

- Low-quality documents. These were files with many sparse tables and templates that carried no information, low-resolution screenshots or photos taken of screens (a frequent “companion” of user manuals), and photos of paper documents. Processing them required the full range of technologies, including vision LLMs and manual review.
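The split into quality groups and the cleanup of repeated characters and tabulation can be approximated with simple rules. This is a hypothetical, rule-based stand-in, not the BERT-based algorithm described above: the threshold of 85 for mean OCR confidence, the `has_images` and `table_ratio` inputs, and the regular expressions are illustrative assumptions. With Tesseract, per-word confidences could be collected, for example, through pytesseract’s `image_to_data`, which is not shown here.

```python
import re


def route_document(mean_ocr_conf, has_images, table_ratio):
    """Pick a processing track; all thresholds here are illustrative assumptions.

    mean_ocr_conf: mean word confidence on Tesseract's 0 to 100 scale.
    table_ratio: share of the page area (0 to 1) covered by tables.
    """
    if mean_ocr_conf >= 85 and not has_images and table_ratio < 0.2:
        return "high_quality"  # automatic cleanup, then Markdown
    return "low_quality"       # vision LLM plus manual review


def clean_text(text):
    """Rule-based cleanup of common OCR artifacts (a stand-in for a learned model)."""
    text = text.replace("\t", " ")                  # tabulation -> spaces
    text = re.sub(r"([^\w\s])\1{3,}", r"\1", text)  # '.....' or '-----' -> single char
    text = re.sub(r" {2,}", " ", text)              # collapse runs of spaces
    text = "\n".join(line.strip() for line in text.splitlines())
    text = re.sub(r"\n{3,}", "\n\n", text)          # keep at most one blank line
    return text.strip()


raw = "Section 1\t\tScope.....\n\n\n\nApplies  to all   units"
print(route_document(92, has_images=False, table_ratio=0.05))  # → high_quality
print(clean_text(raw))
```

Cheap rules like these can take care of the easy cases, leaving a learned model or manual review for the text they cannot fix.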

Hardware used: an NVIDIA A100 GPU, 128 GB RAM, and a 2 TB HDD.

Final Results

We made the data ready for direct use by the AI assistant. Our team processed more than 100,000 files in 10 working days. The client’s employees became more productive, and decision-making accuracy improved.

In addition to an assistant that can answer any question about the company and its rules, regulations, and procedures, the client received recommendations on document generation and a deduplication system for future use. We also helped document processes that had not been described before.

NOXS.AI continues to develop and support the AI assistant created for this client. Since completing this project, we have built other services on top of the deployed LLM, and we look forward to further fruitful cooperation.

The goal was not direct cost savings:

- speed mattered more than savings

- increased productivity (not measured quantitatively; the assessment is rather subjective)

- improved decision accuracy, thanks to the ability to quickly ask the assistant for an answer

Related Projects

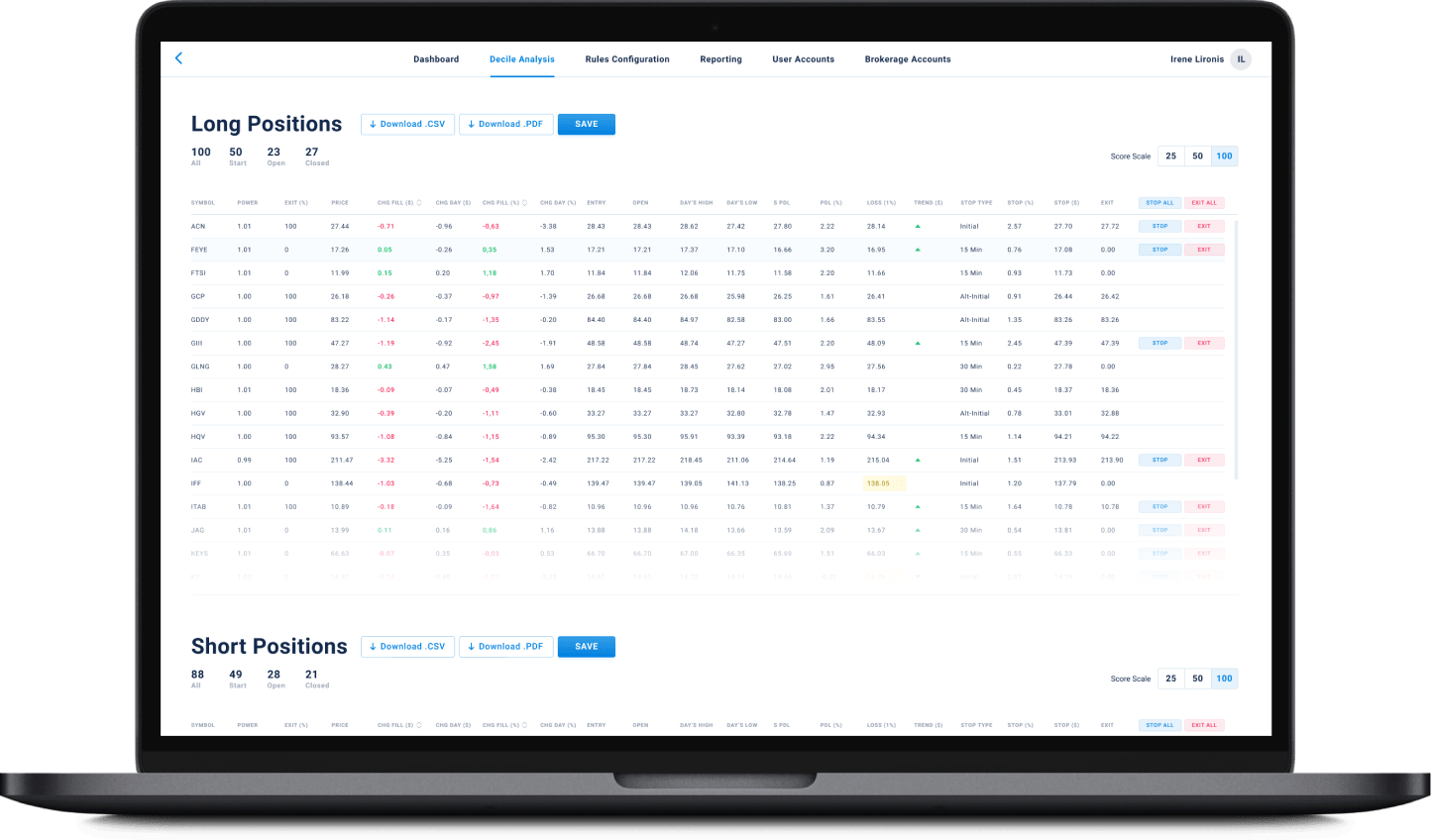

All ProjectsFinancial Data Analytical Platform for one of top 15 Largest Asset Management

Financial Data Analytical Platform for one of top 15 Largest Asset Management

- Fintech

- Enterprise

- ML/AI

- Project Audit and Rescue

AI-based data analytical platform for wealth advisers and fund distributors that analyzes clients’ stock portfolios, transactions, quantitative market data, and uses NLP to process text data such as market news, research, CRM notes to generate personalized investment insights and recommendations.

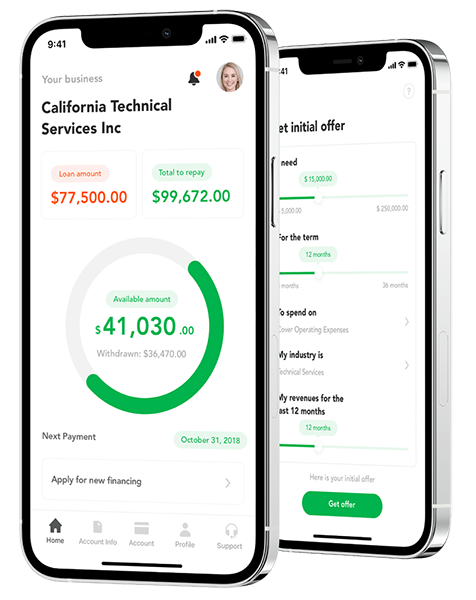

App for Getting Instant Loans / Online Lending Platform for Small Businesses

App for Getting Instant Loans / Online Lending Platform for Small Businesses

- Fintech

- ML/AI

- Credit Scoring

Digital lending platform with a mobile app client fully automating the loan process from origination, online loan application, KYC, credit scoring, underwriting, payments, reporting, and bad deal management. Featuring a custom AI analytics & scoring engine, virtual credit cards, and integration with major credit reporting agencies and a bank accounts aggregation platform.


Offices

8, The Green, STE road, Dover, DE 19901

Żurawia 6/12/lok 766, 00-503 Warszawa, Poland