School Lessons by Checklist: Creating an AI System to Monitor Educational Quality

In a well-organized educational process, lessons start and end precisely on schedule, harmony reigns in the classrooms, and children take their phones out of their backpacks only during breaks. A network of private schools decided to improve the quality of its services by delegating the monitoring of these parameters to artificial intelligence.

About the Client

A network of private schools operating under a unified vision and management structure, offering consistent academic standards, individualized learning approaches, and a strong emphasis on student development.

Project Background

The network's CEO borrowed the idea of checklist-based quality verification from his previous experience in the hospitality business. There, assigning an employee to fill out compliance forms manually every day had raised service to a new level in just 1.5 months.

The CEO wanted to implement a similar scheme in the network's schools and kindergartens, but fully automated to save man-hours. We were entrusted with developing an AI system that could analyze lesson quality from video camera recordings.

Engagement Model

Fixed Price

Project Team

AI data scientist, consultant, ML expert — one individual; Python ML engineers — 2-3 specialists depending on project phase; Project manager; QA specialist; Business analyst.

Stage 1. Hypothesis Testing and Pilot Launch

Before building an interim solution, we needed to verify whether large language models could effectively evaluate lesson video recordings against a checklist, covering both visual and audio aspects. The main challenge was an extremely limited dataset: we could use only a few approved lesson videos, because images of children fall directly under the federal personal data protection law.

For example, the future AI system had to “see” phones in students’ hands, “hear” a teacher raising their voice, and “notice” late arrivals. An important constraint was that we could evaluate only models that did not require large investments in retraining and could be hosted on a closed server with limited resources (around 40 GB of RAM). Using a local server was also necessary to protect the personal data of students and teachers.
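As a rough illustration of the 40 GB constraint, the sketch below estimates how much memory a model's weights would need at a given numeric precision (parameter count × bytes per parameter), multiplied by a flat overhead factor. The overhead factor and the 13B-parameter example are assumptions for illustration, not figures from the project; real usage also depends on context length, activations, and the serving runtime.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def estimate_ram_gb(num_params: float, precision: str, overhead: float = 1.2) -> float:
    """Rough RAM estimate in GB: weight size times an assumed overhead factor.

    The default overhead (1.2, i.e. +20%) is an assumption meant to cover
    runtime buffers; it is not a measured value.
    """
    weights_bytes = num_params * BYTES_PER_PARAM[precision]
    return weights_bytes * overhead / 1e9


def fits_budget(num_params: float, precision: str, budget_gb: float = 40.0) -> bool:
    """True if the estimated footprint fits within the server's RAM budget."""
    return estimate_ram_gb(num_params, precision) <= budget_gb


if __name__ == "__main__":
    # Hypothetical 13B-parameter model at different precisions.
    for precision in ("fp32", "fp16", "int8", "int4"):
        gb = estimate_ram_gb(13e9, precision)
        print(f"{precision}: ~{gb:.1f} GB, fits 40 GB: {fits_budget(13e9, precision)}")
```

Since several models have to share one server, their individual estimates would need to be summed before comparing against the budget.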

Our team included:

- AI data scientist, consultant, ML expert: one individual;

- Python ML engineers: 2-3 specialists depending on project phase;

- Project manager;

- QA specialist;

- Business analyst.

The client also involved its own team:

- Methodological department staff: helped create the requirement checklists and assess the AI system’s evaluation results;

- IT specialists: set up the direct connection between the AI system and the surveillance cameras;

- Lawyers: advised on compliance with personal data protection laws.

During the pilot, we quickly got class schedule processing working. The first report was also easy to produce: an unstructured text file whose only purpose was to demonstrate that the system could, in principle, identify classroom activities.

The greatest difficulty was selecting three AI models, each the most effective at detecting a particular group of parameters:

- For diarization (processing the voice stream and identifying speakers). We tested three options before choosing a suitable one, aiming for high quality without excessive server load.

- For detecting visual events and objects in video frames. We tested about 10 different models, looking for an “intelligent” model that could interpret images accurately yet was compact enough for the available resources.

- For emotion recognition, also from video frames. Choosing this model was simpler: it only needed proper configuration and optimization for a closed server.
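The trade-off described above (the best quality that still fits on the server) can be framed as choosing one model per task so that total quality is maximized while the combined memory footprint stays within the budget. The candidate names, quality scores, and footprints below are hypothetical placeholders; the project's actual evaluation metrics are not described here.

```python
from dataclasses import dataclass
from itertools import product
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Candidate:
    name: str       # hypothetical model identifier
    task: str       # e.g. "diarization", "vision" or "emotion"
    quality: float  # score on the approved test videos, 0..1 (assumed metric)
    ram_gb: float   # memory footprint on the target server


def select_models(candidates: List[Candidate], budget_gb: float) -> Optional[Dict[str, str]]:
    """Pick one candidate per task, maximizing total quality within the RAM budget.

    Exhaustive search over all combinations: feasible because only a handful
    of candidates per task are compared. Returns None if no combination fits.
    """
    by_task: Dict[str, List[Candidate]] = {}
    for c in candidates:
        by_task.setdefault(c.task, []).append(c)

    best_combo, best_score = None, float("-inf")
    for combo in product(*by_task.values()):
        if sum(c.ram_gb for c in combo) > budget_gb:
            continue  # this combination would not fit on the server
        score = sum(c.quality for c in combo)
        if score > best_score:
            best_combo, best_score = combo, score

    if best_combo is None:
        return None
    return {c.task: c.name for c in best_combo}
```

Brute force is a deliberate choice here: with roughly 3 × 10 × a few candidates the search space is tiny, and unlike a greedy per-task pick it never sacrifices a much better model in one task for a marginal gain in another.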

Together with the methodological department, we developed two checklists: one for all schools in the network and another for kindergartens. Each contains parameters that the AI system evaluates using the data obtained from the language models, marking each one accordingly. Examples of parameters:

- Lesson started within 2 minutes of scheduled time;

- Students did not use smartphones during the lesson;

- No students were late;

- Lesson ended on time, meaning children started packing their backpacks 2 minutes before the end, etc.
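One way to turn model outputs into checklist marks is to express each parameter as a simple rule over facts extracted from the recording. The sketch assumes a hypothetical `LessonFacts` record; its field names, and the reading of the last rule as “packing began no earlier than 2 minutes before the end”, are our assumptions rather than the project's actual schema.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class LessonFacts:
    # Hypothetical facts extracted by the AI models for one lesson.
    start_delay_min: float         # actual start minus scheduled start, in minutes
    late_students: int             # students who arrived after the start
    smartphone_detections: int     # frames where a phone was seen in a student's hands
    packing_before_end_min: float  # minutes before scheduled end when packing began


# Each checklist item maps to a predicate over the extracted facts.
CHECKLIST: Dict[str, Callable[[LessonFacts], bool]] = {
    "Lesson started within 2 minutes of scheduled time": lambda f: abs(f.start_delay_min) <= 2,
    "Students did not use smartphones during the lesson": lambda f: f.smartphone_detections == 0,
    "No students were late": lambda f: f.late_students == 0,
    # Assumption: "on time" = packing began no earlier than 2 minutes before the end.
    "Lesson ended on time": lambda f: f.packing_before_end_min <= 2,
}


def evaluate(facts: LessonFacts) -> Dict[str, str]:
    """Mark every checklist item '+' if its rule holds and '-' otherwise."""
    return {item: "+" if rule(facts) else "-" for item, rule in CHECKLIST.items()}
```

Keeping the thresholds in plain rules, separate from the models, lets the methodological department adjust a requirement (for example, the 2-minute tolerance) without touching the detection pipeline.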

The piloting and hypothesis testing process took about 2 months in total.

Stage 2. System Division into Microservices

To scale up, we moved to a more powerful server, where the task was to deploy the same language models and get the most out of them. To turn the pilot into a scalable, production-ready system able to handle heavier workloads, we split the development into four separate microservices:

- Diarization microservice. Receives audio streams from the cameras, transcribes speech into text, and splits it into teacher and student utterances, treating all students as one speaker and the teacher as another.

- Visual language model microservice, deployed locally. To save server memory, it stores recordings as frames rather than whole video files. Video now streams directly from the cameras over the RTSP protocol; this connection was set up quickly and smoothly.

- Emotion detection microservice. Also receives video frames and uses them to determine the average emotional climate in the classroom: joy, fear, anger, constraint, etc.

- Orchestrator microservice, or data processing pipeline. Automates camera recordings according to the class schedule, which is now stored in the database as a large Excel file; coordinates the other microservices; and processes the checklist verification results.
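The diarization microservice treats the teacher as one speaker and all students as another. Assuming a diarization model returns segments with arbitrary speaker labels (such as `SPEAKER_00`), the collapsing step and a talk-time summary could look like the sketch below. Identifying the teacher as the speaker with the most talk time is our heuristic for illustration, not necessarily the project's method.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Segment:
    start: float   # seconds from the start of the recording
    end: float
    speaker: str   # raw label from the diarization model, e.g. "SPEAKER_00"
    text: str      # transcribed utterance


def guess_teacher(segments: List[Segment]) -> str:
    """Heuristic (assumption): the teacher is the speaker with the most talk time."""
    talk: Dict[str, float] = defaultdict(float)
    for s in segments:
        talk[s.speaker] += s.end - s.start
    return max(talk, key=talk.get)


def collapse_speakers(segments: List[Segment], teacher_label: str) -> List[Segment]:
    """Relabel segments as 'teacher' or 'students' (all students are one speaker)."""
    return [
        Segment(s.start, s.end, "teacher" if s.speaker == teacher_label else "students", s.text)
        for s in segments
    ]


def talk_share(segments: List[Segment]) -> Dict[str, float]:
    """Fraction of total speaking time per collapsed speaker."""
    totals: Dict[str, float] = defaultdict(float)
    for s in segments:
        totals[s.speaker] += s.end - s.start
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {speaker: t / grand_total for speaker, t in totals.items()}
```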

An OpenAI-compatible interface was used in development, so that any of the services can be replaced at any time.
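With an OpenAI-compatible interface, every model is called with the same Chat Completions request shape, so a locally hosted model can be swapped by changing only the base URL and model name. The sketch below uses only the standard library; the base URL, model name, and prompts are placeholders, and it assumes the local serving runtime exposes the standard `/chat/completions` endpoint under that base URL. Attaching video frames as image content parts for the visual model is omitted for brevity.

```python
import json
import urllib.request


def build_chat_request(model: str, system_prompt: str, user_prompt: str,
                       temperature: float = 0.0) -> dict:
    """Build a request body in the OpenAI Chat Completions format."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "temperature": temperature,
    }


def chat(base_url: str, payload: dict, api_key: str = "not-needed-locally",
         timeout: float = 120.0) -> str:
    """POST the payload to {base_url}/chat/completions and return the reply text."""
    req = urllib.request.Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Usage would look like `chat("http://localhost:8000/v1", build_chat_request("local-vlm", "Mark each checklist item + or -.", "..."))`, where the URL and model name are hypothetical; replacing a service then means pointing `base_url` at a different server.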

During setup, we ran into logical errors caused by the specifics of certain lessons and classrooms. The system performed its checks and marked results as usual, but manual verification revealed inconsistencies.

We identified three groups of school subjects that required specialized checklists:

- Foreign languages, because of the need for multilingual transcription. Children and teachers switch between Russian and the language being studied and often make significant pronunciation errors, which complicates processing by the language models.

- Computer Science and Art, because of atypical classroom layouts and room specifics. Cameras may point at students’ backs or be too far away, which severely reduces audio quality and makes emotion detection impossible.

- Physical Education, which was considered separately because the lesson location changes with the seasons and the subject generally requires a unique approach.
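The subject-specific exceptions above can be handled by routing each lesson to a checklist variant before verification. The variant names and the subject-to-variant mapping are hypothetical (English and German stand in for any foreign language); they only mirror the groups listed in this section.

```python
# Hypothetical checklist variants mirroring the subject groups described above.
SPECIAL_CHECKLISTS = {
    "english": "foreign_language",
    "german": "foreign_language",
    "computer science": "atypical_room",
    "art": "atypical_room",
    "physical education": "physical_education",
}


def checklist_for(subject: str, institution: str) -> str:
    """Pick the checklist variant for a lesson.

    `institution` is "school" or "kindergarten"; kindergartens have their own
    checklist, and all other school subjects fall back to the default one.
    """
    if institution == "kindergarten":
        return "kindergarten"
    return SPECIAL_CHECKLISTS.get(subject.strip().lower(), "school_default")
```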

To resolve the camera issues, we compiled lists of cameras needing firmware updates or replacement, as well as sites where additional cameras should be installed. The methodological department took charge of refining the checklists for subjects that are difficult to analyze. Poor video transmission caused by slow internet connections was addressed by installing additional Wi-Fi receivers.

Stage 2 also took around 2 months.

Client Interaction with the AI System

Our solution collects data from the cameras, processes it, and uses the results to verify checklist parameters. Here is how it works:

Example

Class 9A starts a math lesson in classroom 135.

The orchestrator microservice connects to the classroom camera, records the 45-minute lesson, then forwards the recording for processing by other microservices.
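The orchestrator's scheduling step can be sketched as turning timetable rows into recording jobs: for each lesson, open the classroom camera at the scheduled start and stop after the lesson length. The `Lesson` fields, the room-to-camera table, and the RTSP address are hypothetical (real camera URLs depend on the vendor), and the optional margin around the lesson is our assumption, defaulting to zero to match the 45-minute recording described here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Lesson:
    class_name: str   # e.g. "9A"
    subject: str      # e.g. "Math"
    room: str         # e.g. "135"
    start: datetime   # scheduled start


@dataclass
class RecordingJob:
    rtsp_url: str
    record_from: datetime
    record_until: datetime
    lesson: Lesson


# Hypothetical room-to-camera mapping; the address is a placeholder.
CAMERAS = {"135": "rtsp://10.0.0.35:554/stream1"}


def plan_recordings(lessons: List[Lesson], lesson_minutes: int = 45,
                    margin_min: int = 0) -> List[RecordingJob]:
    """Create one recording job per lesson whose room has a registered camera."""
    jobs = []
    margin = timedelta(minutes=margin_min)
    for lesson in lessons:
        url = CAMERAS.get(lesson.room)
        if url is None:
            continue  # no camera registered for this room; nothing to record
        jobs.append(RecordingJob(
            rtsp_url=url,
            record_from=lesson.start - margin,
            record_until=lesson.start + timedelta(minutes=lesson_minutes) + margin,
            lesson=lesson,
        ))
    return jobs
```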

These microservices process the audio and video streams, extract frames, and store the results in the database.
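Storing frames instead of whole video files usually means sampling the stream at a fixed interval. The sketch below only computes which frame indices to keep, and their timestamps, for a given frame rate; the 10-second interval is an illustrative assumption. In a real service these indices would drive a capture loop, for example one built on OpenCV's `cv2.VideoCapture` opened on the camera's RTSP URL.

```python
from typing import List, Tuple


def frames_to_keep(duration_s: float, fps: float, every_s: float = 10.0) -> List[Tuple[int, float]]:
    """Return (frame_index, timestamp_s) pairs sampled every `every_s` seconds.

    Assumes a constant frame rate; live RTSP streams can drift, so a production
    service would rather rely on the timestamps reported by the decoder.
    """
    if fps <= 0 or every_s <= 0:
        raise ValueError("fps and every_s must be positive")
    step = max(1, round(fps * every_s))    # frames between two kept samples
    total_frames = int(duration_s * fps)   # frames in the whole recording
    return [(i, i / fps) for i in range(0, total_frames, step)]
```

For a 45-minute lesson (2,700 s) at 25 fps, this keeps 270 of 67,500 frames, which illustrates why the frame-based approach saves memory.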

The orchestrator then queries the three AI models about specific checklist parameters. The responses come back as lists in which each item is marked “+” or “-”.

If all checklist items are marked “+”, the checklist is 100% fulfilled: an ideal lesson. Each “-” lowers this percentage and signals a shortcoming in the lesson.
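The score is simply the share of “+” marks among all checklist items. The sketch assumes the model response has already been normalized to one item per line ending in “+” or “-”; that line format is a hypothetical choice, and raw model output would need more defensive parsing.

```python
from typing import Dict


def parse_marks(response: str) -> Dict[str, str]:
    """Parse lines like 'No students were late: +' into {item: mark}."""
    marks = {}
    for line in response.splitlines():
        line = line.strip()
        if not line or line[-1] not in "+-":
            continue  # skip blank lines and lines without a final mark
        item = line[:-1].rstrip(" :")
        marks[item] = line[-1]
    return marks


def fulfillment_percent(marks: Dict[str, str]) -> float:
    """Percentage of checklist items marked '+' (100.0 means an ideal lesson)."""
    if not marks:
        raise ValueError("no checklist marks to score")
    return 100.0 * sum(m == "+" for m in marks.values()) / len(marks)
```

With four items and one “-”, the lesson scores 75%.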

School administrators track metrics over time by class, teacher, and subject, but not through our system. A separate client team integrates our checklist reports into a complex Power BI solution to build charts, dashboards, tables, and reports.

We walked the client’s system administrator through the essential monitoring tools and the admin control panel. We also prepared training for the methodological department and convenient exports of the results. The client’s data integration team received detailed architecture documentation, along with brief consultations.

Future Improvements

The key feature of our AI system is automation: it emulates a person standing outside classrooms and marking checklist items. The computer does this autonomously, saving countless man-hours.

Currently, the system only records that incidents occurred. Future plans include measurement features that back up verification results with evidence, such as precise timestamps or frames showing when events occur.

The project is currently being rolled out to production, with substantial work expected over another 3-4 months. Our team is committed to its success.

Related Projects

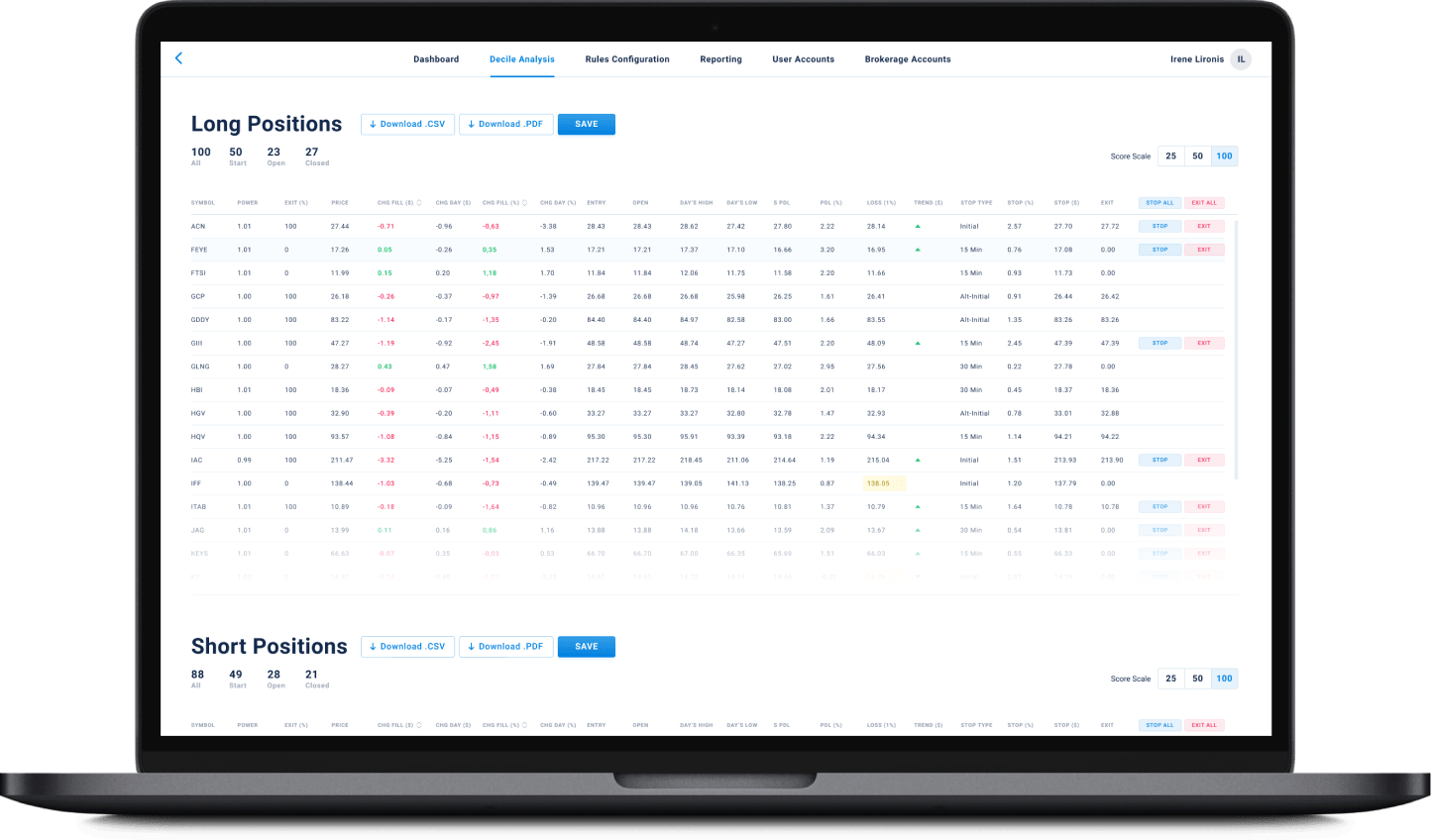

All ProjectsFinancial Data Analytical Platform for one of top 15 Largest Asset Management

Financial Data Analytical Platform for one of top 15 Largest Asset Management

- Fintech

- Enterprise

- ML/AI

- Project Audit and Rescue

AI-based data analytical platform for wealth advisers and fund distributors that analyzes clients’ stock portfolios, transactions, quantitative market data, and uses NLP to process text data such as market news, research, CRM notes to generate personalized investment insights and recommendations.

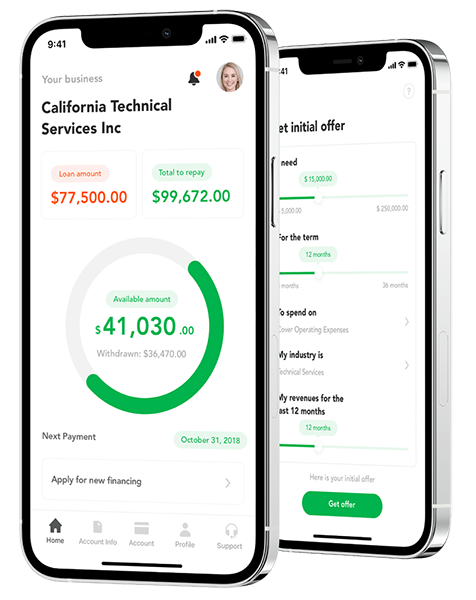

App for Getting Instant Loans / Online Lending Platform for Small Businesses

App for Getting Instant Loans / Online Lending Platform for Small Businesses

- Fintech

- ML/AI

- Credit Scoring

Digital lending platform with a mobile app client fully automating the loan process from origination, online loan application, KYC, credit scoring, underwriting, payments, reporting, and bad deal management. Featuring a custom AI analytics & scoring engine, virtual credit cards, and integration with major credit reporting agencies and a bank accounts aggregation platform.
