The Story of AI Database Navigator: Increasing Employee Speed And Putting Documents In Order

The project to create an AI-based first-line customer support assistant for a manufacturer of complex agricultural equipment was the result of a new business acquaintance. The solution came with the realization of a problem: the company's employees had to spend too much time searching for information and writing standard answers. Our client understood the scale of the task, taking into account the specifics of their initial data, and was willing to wait more than a year. We managed to complete the bulk of the work in 2 months.

About the Client

A specialized industrial enterprise engaged in the design, engineering, and manufacturing of advanced agricultural machinery and equipment, intended to support large-scale farming operations through enhanced efficiency, precision, and technological innovation.

Project Background

How does AI navigator work

The proposed solution was an artificial intelligence system based on Retrieval-Augmented Generation (RAG) technology to efficiently search and provide answers to users’ questions using a database of documents.

This system has included an LLM (large language model) that generates responses. Only instead of relying solely on the model’s trained data, it additionally pulls in relevant information from a document database. Two key steps can be distinguished here:

-

Search for relevant information. The user makes a query (e.g., through an interface). RAG algorithms search the document database for answers, selecting the most relevant fragments.

-

Response Creation. Using the information found, the LLM generates a text response that is accurate, understandable, and meaningful.

RAG ensures the relevance of the answer, because the system works not only with a pre-trained model, but also with a specific database (documents, reference books, instructions, etc.). And even if the LLM does not have information about the requested item, it will pull it up from that very database.

Engagement Model

Fixed price

Project Team

Solution architect, 2 .Net, 2 iOS Dev + iOS Tech Lead, 2 Android, 2 QA, BA,PM, Designer, AQA, DevOps, 2 Front-End, 20 members in total

Tech stack / Platforms

Solution Overview

Examples of the use of our solution:

-

Corporate chats. Employees can ask questions about internal processes (example: “What are the current business travel instructions?”) – the system will provide an accurate and up-to-date answer.

-

Customer Service. The system works as an intelligent chatbot providing answers to user questions based on company documentation.

-

Legal or technical advice. AI Navigator quickly finds the information you need by referring to internal or public documents to provide accurate and complete answers.

Benefits of the solution:

-

Interactivity. The users feel as if they are interacting with a “smart assistant”.

-

Integration. API makes the solution flexible: it is easy to integrate it into the company’s current ecosystem.

-

Time saving. Quick access to large amounts of information.

-

Flexibility. At the initial stage, Gradio allows you to conveniently use the interface, which can later be replaced with a custom interface for the company’s needs.

This solution is almost universal, as it can be adapted to any process where quick retrieval and clarification of information is required.

Now back to the case.

The Course Of the Project

Information extraction

Collecting data from more than 300,000 files for further processing was not easy. About 70% of them were duplicates, as well:

-

The files were hosted on different sources and were presented in several formats: pdf, doc, docx, txt, xlsx, pptx and others. In particular, we had to extract information from the company’s YouTube videos, as the client did not have their originals on local media. We automated the process of downloading and transcribing audio directly from the video hosting site, after which we submitted the results to LLM to put the text in order and split it into logical blocks. It also required reading information from technical documents (e.g., instruction manuals), analyzing about 25,000 first-line employee dialogues and dictated recordings.

There were many tables in the documents, which are not well understood by AI. We made separate promts and scripts to convert them into easy-to-read code for the machine. As part of the integration of the RAG system with the AI navigator, the formation of a database of promts and scripts allows information from the knowledge base to be adapted into a structured form that can be easily processed by a machine. Here are three examples of such promts and scripts:

Example 1: System access questions Promt for AI: A script for first-line employees:

Greeting and clarification of the problem:

“Hello! I’ll help you figure out your access. You can’t log in, right? Tell me what you see on the screen when you try to log in.”

Finding out the context:

“Please clarify: are you using the correct login? Does the error message, if any, contain text or code?”

Standard checks:

“Let’s try the next steps:

-

Make sure the keyboard is switched to the correct language.

-

Check if the Caps Lock key is enabled.

-

Change your device or browser and try again.”

If the problem is not solved:

“If nothing helps, I will send you instructions to reset your password. If the problem persists after that, I’ll forward the request to technical support.”

Example 2: Requesting information from the corporate knowledge base

Promt for AI:

A script for first-line employees:

Employee Instruction:

“To get an answer to your question through the knowledge base, you can use a voice query. For example, say: ‘Where can I find instructions for working with the CRM system?’ or ‘Show help on the topic of accruals.’”

Formalizing the request:

“When you request information, try to be as specific as possible. For example, instead of ‘How does the system work?’ it is better to specify: ‘How do I change customer data in CRM?’”

Response to request:

“The system provides step-by-step instructions. If the problem persists or no information is found, you will be prompted to contact technical support or go to a second help line.”

Example 3: Handling a dictated complaint about a technical problem

Promt for AI:

A script for first-line employees:

Extracting key information from a query:

The AI navigator automatically highlights key points:

-

Date and time of failure: “For example, the user reported, ‘The system stopped working on May 15 at 2:30 pm.’”

-

Problem description: “For example: ‘An error with code 503 appears when loading data.’”

Steps to fix the problem:

“Check with the client:

-

Have you tried rebooting the system?

-

Does the problem manifest itself only with you or with other colleagues?

-

Can you try a different network (such as mobile internet)?”

Transmitting information to the next line:

“If the problem persists, submit a request to technical support with the following information:

-

The date and time of the failure.

-

A description of the action that caused the failure.

-

Error or error code, if any.”

These examples demonstrate how you can handle user requests, format them in an AI-friendly format, and provide first-line employees with easy-to-understand scripts to communicate with customers and solve their problems.

-

There was a difficulty with partitioning the extracted information into semantic blocks necessary for feeding knowledge into the context window – it was desirable to make them complete for the use of RAG technology. There are several approaches to partitioning (by number of characters, by delimiter character, division using LLM, based on semantic analysis/proximity, etc.), but none of them could provide the necessary quality. Therefore, at first we had to split the information into rather large blocks (2-3 thousand characters), according to the formatting and with an attempt to preserve the meaning, and later – into smaller chunks of 500-700 characters. Those are more suitable for searching, besides, there are restrictions of some embeddings on text length for vectorization.

-

Since the assistant had to have up-to-date information, in parallel we automated the container to automatically update the knowledge base.

The whole stage took about a week and was completed successfully. From server technologies we used Docker for containerization and vLLM for LLM inference.

Working on “gaps”

In the next step, we derived benchmark questions and answers that were implemented into the AI assistant base. Using LLM, we evaluated the chatbot system using a number of metrics:

-

language;

-

completeness of answers;

-

their accuracy, etc.

The accuracy of answers to test questions is 90% or higher, which is necessary for the assistant to work properly. We were unable to achieve it and understand what was going on until we started reviewing the data manually. Next, we automated the process of assessing the contextualization of the reference questions based on the same reference answers.

It turned out that the reference answers were not contained or not fully represented in the source files provided by the company. To solve the problem on the client’s side, a lot of work was done to complete and update the regulatory documents. Putting the data in order became useful not only for our further work on the assistant, but also for the customer himself.

Intensive work to close the “gaps” took about a month. The result was a 92% response accuracy rate.

Expanding the context

A significant problem in testing benchmark questions was the lack of information put into them. The way a person formulates and understands them can make a huge difference. As a result, there are skews and inconsistencies between questions and answers. In addition, in some places there was missing or contradictory information in the documents.

Most often, the user will ask a question as briefly as possible, at the keyword level, as if they were typing it into a browser search box. The context may not be read, although it is the context that is most valuable.

In order for the chatbot to understand the questions “more broadly”, we taught it how to decipher, expand and supplement them. We compiled special examples and samples, enriched the data with content:

-

who the user is;

-

what his search history is;

-

What he might be interested in, etc.

The technique is called Few-Shot. Using it, we selected previously decoded “questions-answers”, fed them in the form of LLM Prompts and asked it to construct full questions by analogy. This allowed the AI to better understand the context and provide more complete answers.

Results

Despite the difficulties, the NOXS.AI team carried out high-quality data preparation and implementation into the assistant. The company received our finished product – an AI navigator for the Knowledge Base, whose data processing and storage we also helped to systematize. Visits to the corporate portal and Wikipedia dropped by about half in the first few months – there was no need for them, as the bot provided answers faster and with acceptable accuracy.

Along with the speed, the productivity and accuracy of decision-making have increased. Thanks to our cooperation, the company’s regulatory documents were put in order, including their content. The client was satisfied. Soon after the project was completed, he asked us for help in developing other services.

Related Projects

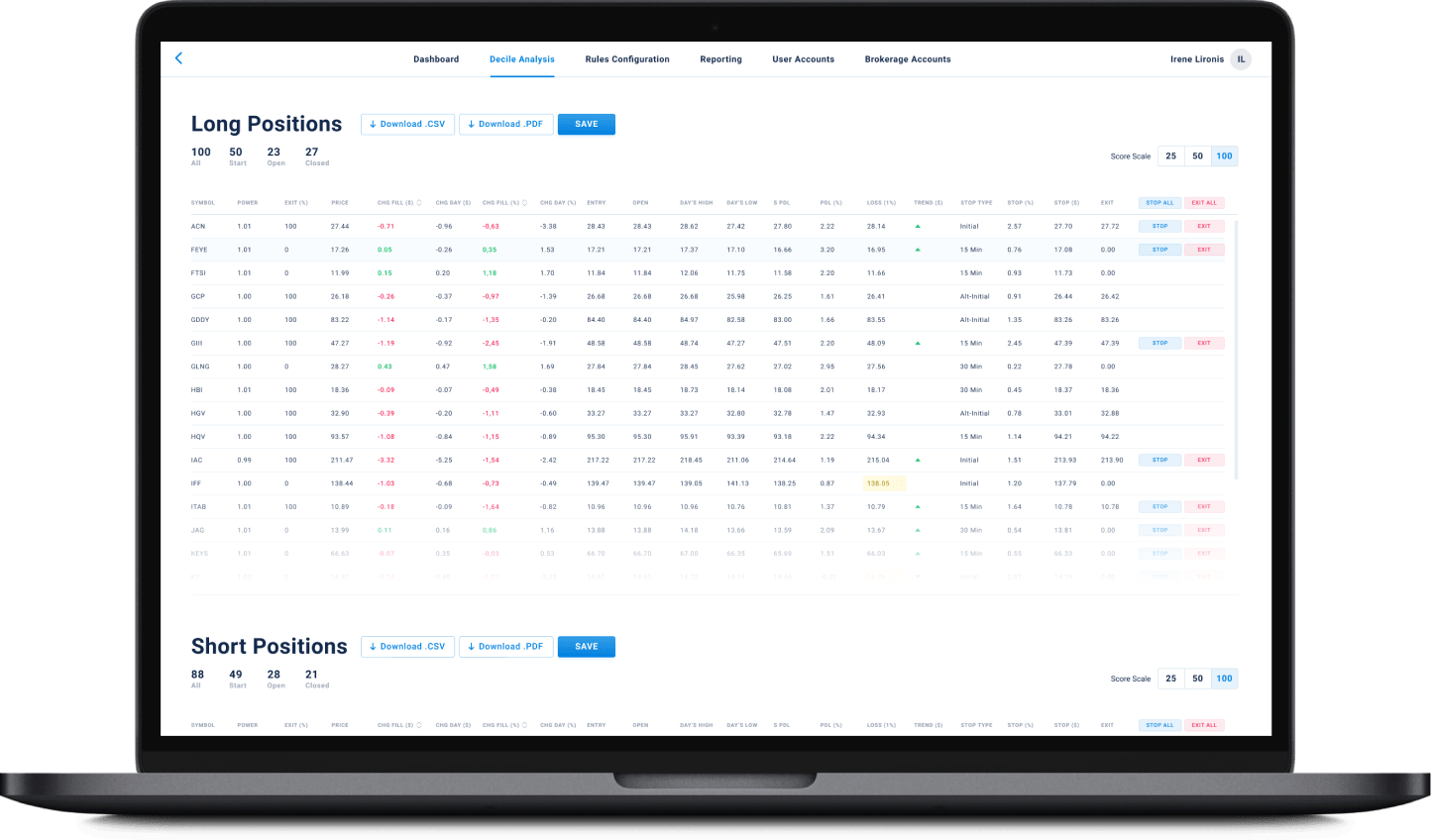

All ProjectsFinancial Data Analytical Platform for one of top 15 Largest Asset Management

Financial Data Analytical Platform for one of top 15 Largest Asset Management

- Fintech

- Enterprise

- ML/AI

- Project Audit and Rescue

AI-based data analytical platform for wealth advisers and fund distributors that analyzes clients’ stock portfolios, transactions, quantitative market data, and uses NLP to process text data such as market news, research, CRM notes to generate personalized investment insights and recommendations.

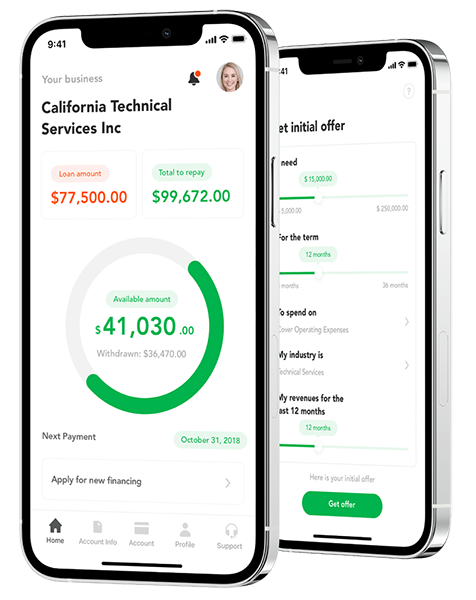

App for Getting Instant Loans / Online Lending Platform for Small Businesses

App for Getting Instant Loans / Online Lending Platform for Small Businesses

- Fintech

- ML/AI

- Credit Scoring

Digital lending platform with a mobile app client fully automating the loan process from origination, online loan application, KYC, credit scoring, underwriting, payments, reporting, and bad deal management. Featuring a custom AI analytics & scoring engine, virtual credit cards, and integration with major credit reporting agencies and a bank accounts aggregation platform.

Contact Form

Drop us a line and we’ll get back to you shortly.

For Quick Inquiries

Offices

8, The Green, STE road, Dover, DE 19901

Żurawia 6/12/lok 766, 00-503 Warszawa, Poland